Posts

category: Compositing

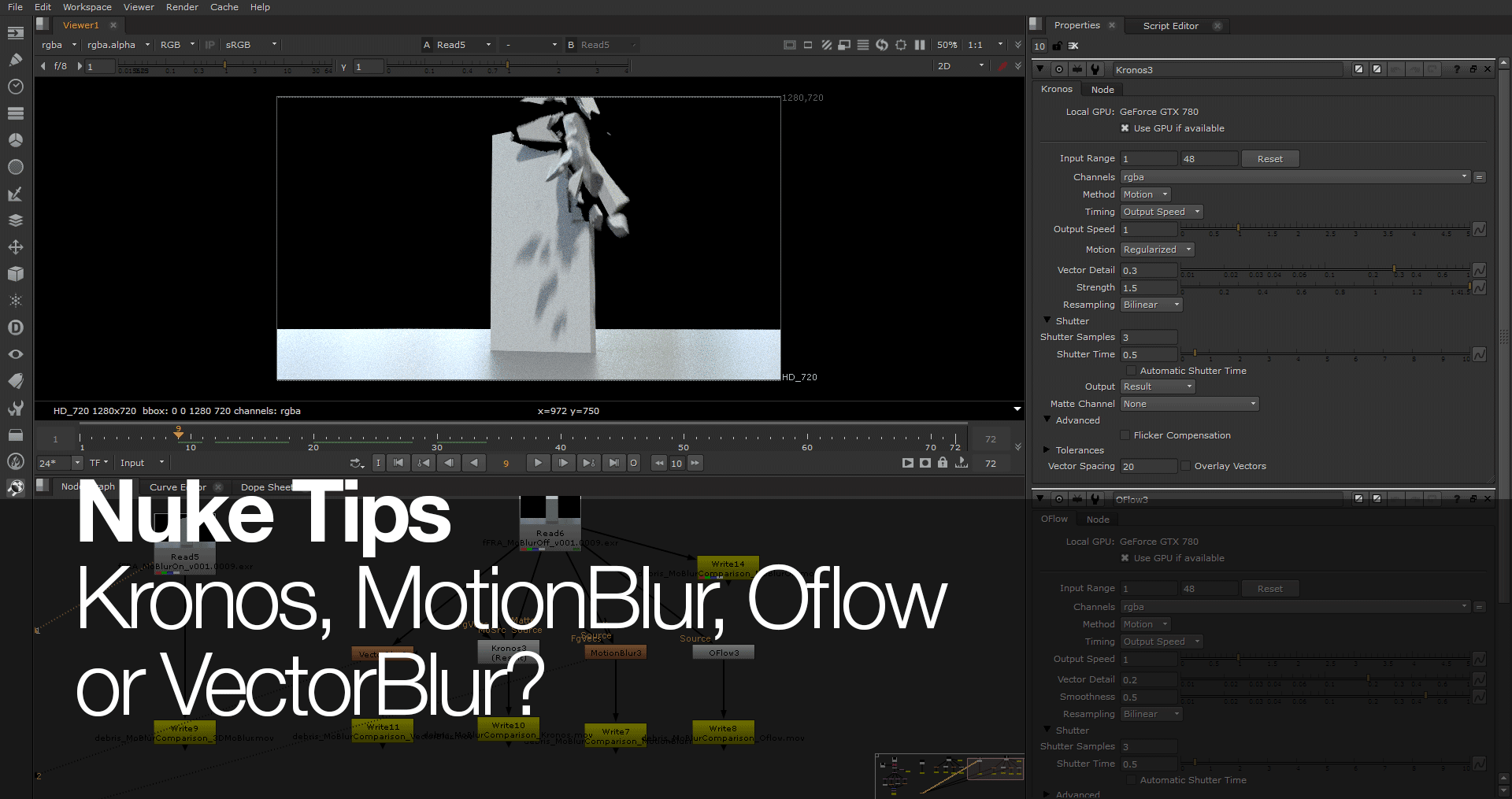

Nuke Tips – Kronos, MotionBlur, Oflow, or VectorBlur?

Which motion blur node should you use for FX elements: Kronos, MotionBlur, Oflow, or VectorBlur? Read on to find out the difference between 3D motion blur and post motion blur added in Nuke.

Nuke 1001 – Practical Compositing Fundamentals (3DCG)

A guide to practical compositing fundamentals in Nuke for 3DCG projects.

Recreate After Effects Settings in Nuke (and vice versa)

Recreate After Effects Settings in Nuke? Why? Tell me why?

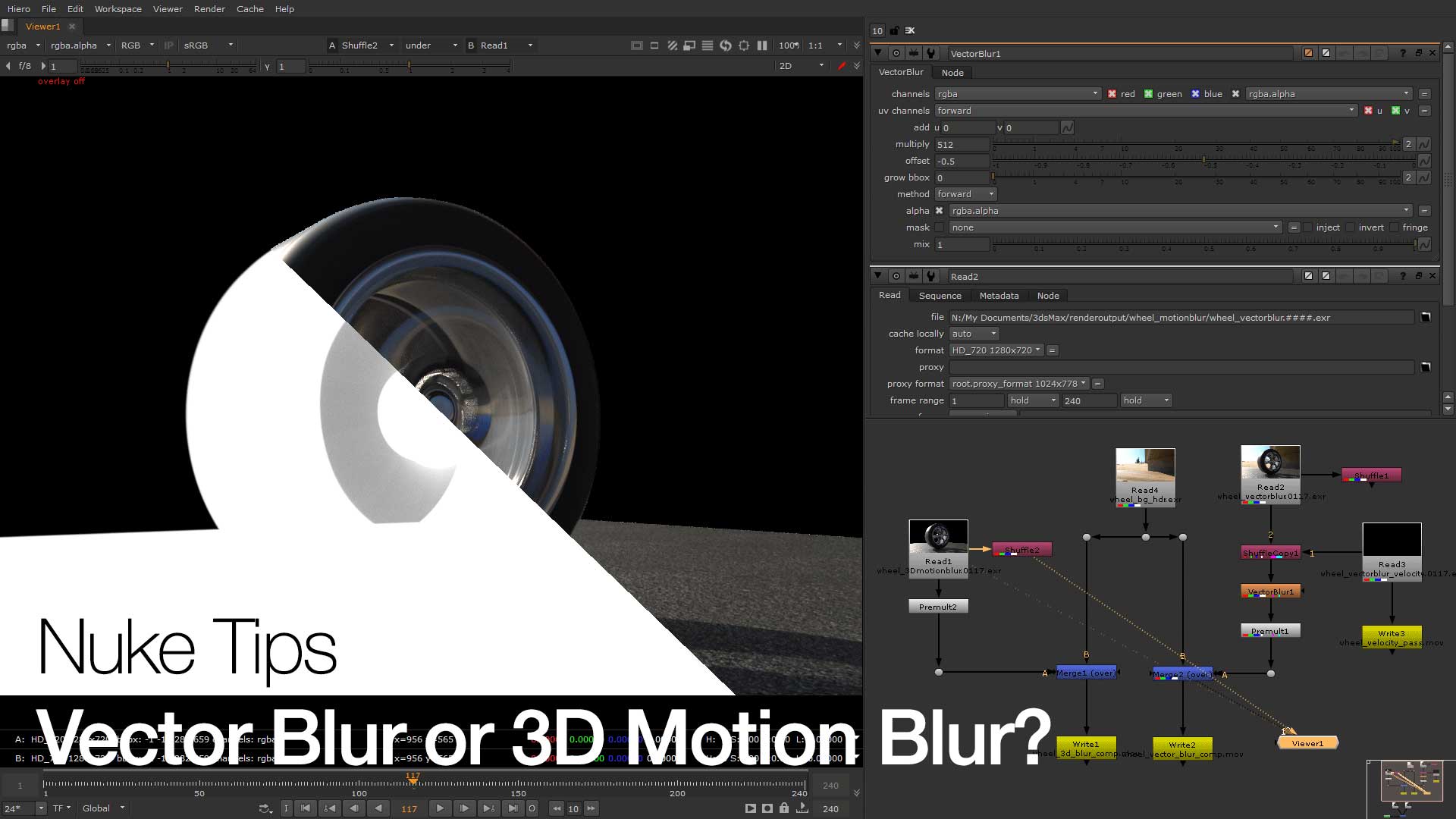

Nuke Tips (of the month) – Vector Blur or 3D motion blur?

Everything is so blurry between Vector Blur and 3D Motion Blur in this edition of Nuke Tips.

Quick Intro to 2D Tracking in After Effects and Nuke

A quick intro to 2D tracking in After Effects and Nuke for beginners to both compositing and matte painting work.

Incriminate Behind the Scenes – Part 1

Part one of the Incriminate behind the scenes, covering the initial pre-production and software. Also includes an outtakes video.

H.264 Compression Comparison

Exporting your beauty render from Nuke to H.264 with minimal loss is tricky. The article includes a comparison GIF and guidelines.

A guide to Nuke Non-Commercial

Want to learn Nuke and wondering what’s the catch with Nuke Non-Commercial? This guide covers the limitations and an alternative solution.

Intro to Octane Render Passes for 3dsmax

An intro to Octane Render Passes for 3dsmax, covering the essential passes that are useful for compositing in applications like Nuke.