Posts

category: Nuke Tips

Nuke Tips – Procedural Film Scratch (Creating Gizmo) Part 1

Learn how to create a gizmo that generates procedural film scratches in Nuke in this three-part tutorial. Part 1 covers the introduction and a walkthrough of creating a basic gizmo.

Nuke Tips – Matching Colour with Grade Node

This article covers the process of matching colour with the Grade node.

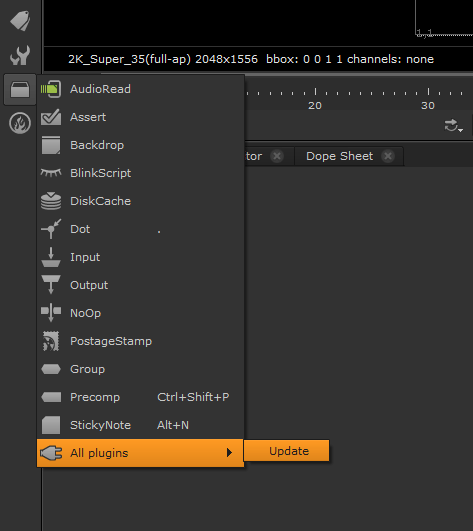

Nuke Tips – Easy Access to Gizmo

A guide to easy access to gizmos in Nuke.

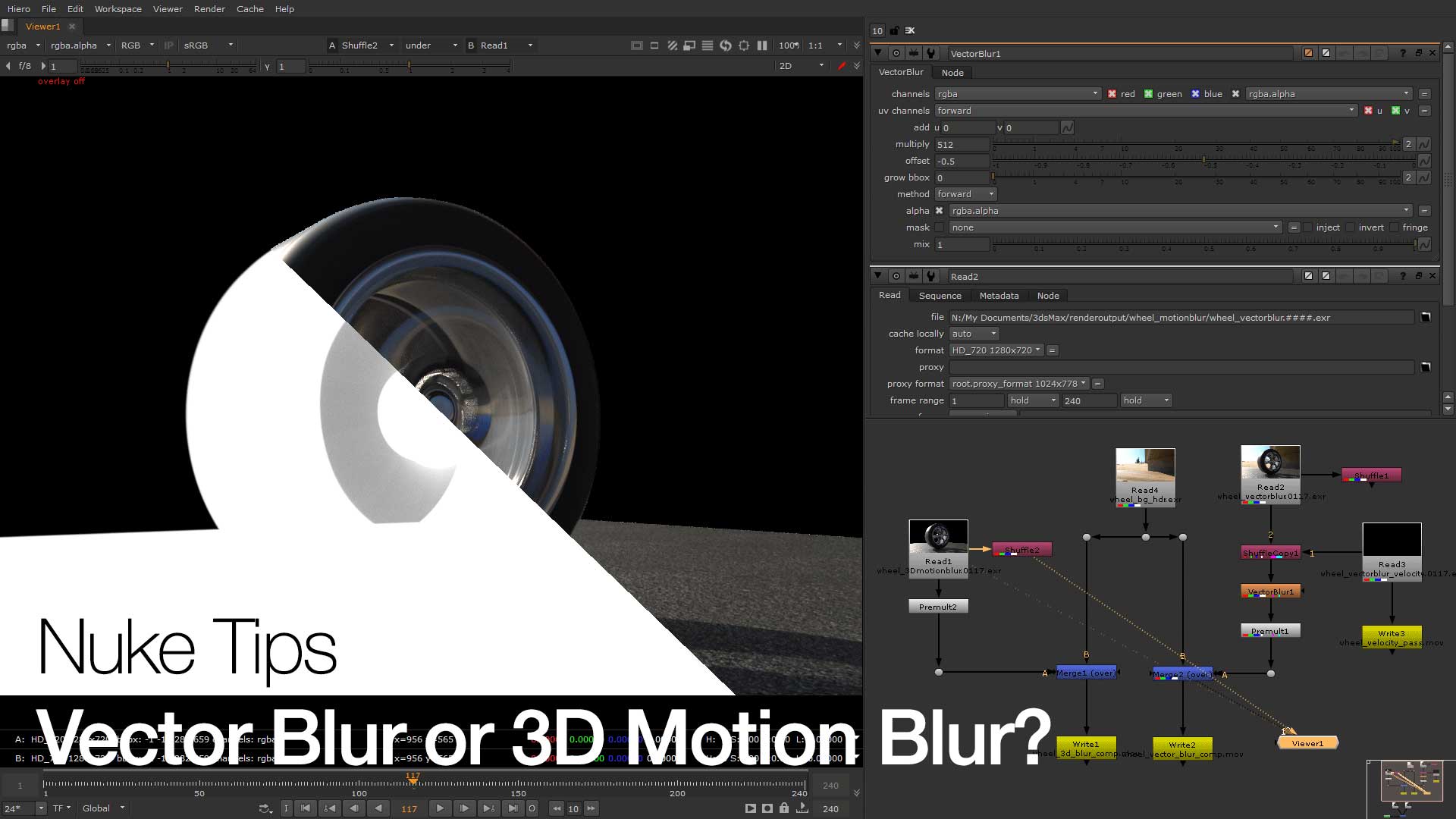

Nuke Tips (of the month) – Vector Blur or 3D motion blur?

Everything is so blurry between Vector Blur and 3D Motion Blur in this Nuke Tip.

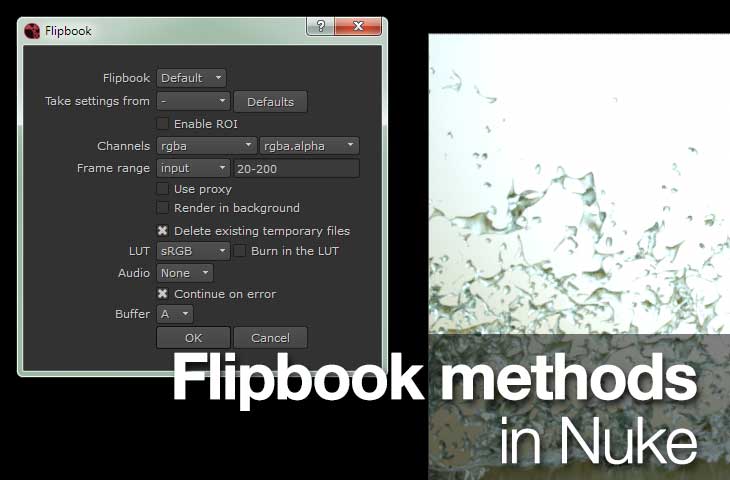

Nuke Tips – Flipbook Methods in Nuke

This post explores the many flipbook methods in Nuke.

Nuke Tips – Noise in Nuke

A quick rundown of the Noise node in Nuke and its usage in a typical compositing job.

Nuke Tips – Render Order

A case study of using Render Order in Nuke and an explanation of its usage in a pipeline.

Nuke Tips – Masking in Nuke

This post explains what you can and cannot do with masking in Nuke. Includes samples and project files for your exploration.

Nuke Tips – Flare Node

Exploring the Flare node in Nuke and its practical usage in your daily Nuke compositing.