Posts

category: FX

CR Devil May Cry 4 – FX Tasks List

My last project as an FX artist, the CR Devil May Cry 4 pachislot, before I embarked on my journey as a Python developer in the financial technology industry!

Learning Houdini for Project DQ

A lengthy post on my experience learning Houdini for Project DQ as a complete newbie! It might be useful or complete bollocks, depending on your interests.

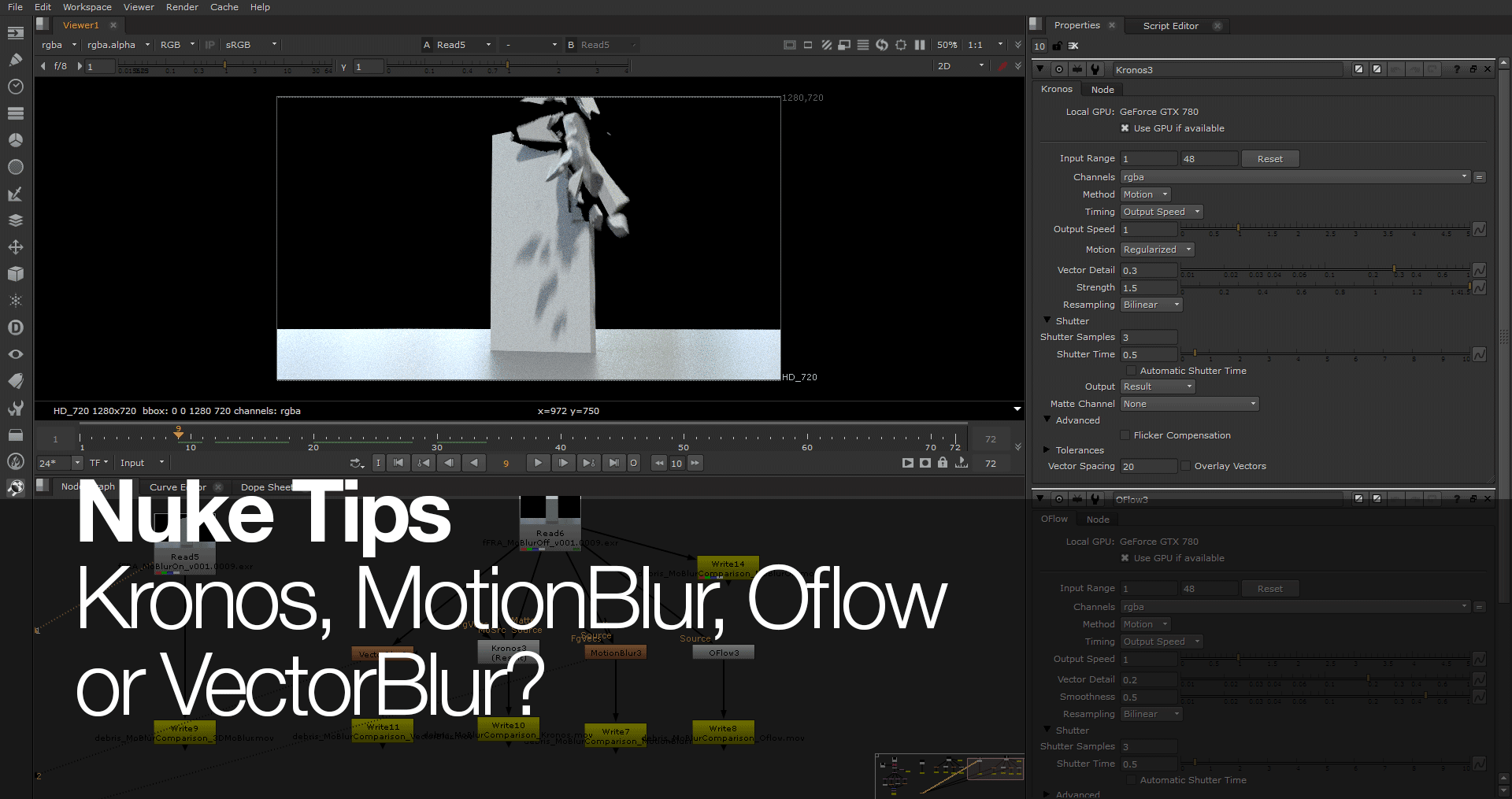

Nuke Tips – Kronos, MotionBlur, Oflow, or VectorBlur?

Which motion blur node should you use for FX elements: Kronos, MotionBlur, Oflow, or VectorBlur? Read on to find out the difference between 3D motion blur and motion blur added in post in Nuke.

Blood FX using RealFlow

This tutorial covers the creation of Blood FX using RealFlow as seen in Gantz: O.
